AI & Machine Learning - Technology & Ethics

Sondrel Tech Talks are an ongoing series of presentations for employees from thought leaders on the challenges that will shape the future of SoC design. The series is one of the channels that champion a culture of knowledge sharing within the company.

Author: Tom Curren

Artificial Intelligence (AI) might seem like a far-off sci-fi concept, but it’s dominating mainstream news in 2018. Enzo D’Alessandro, a Hardware Engineering Manager at Sondrel, harbours a particular passion for machine learning, a key subset of AI. He recently shared his observations of the research and development in this field, as part of Sondrel’s ongoing internal Tech Talk series, and offered insights into the many challenges that the development of machine learning technology poses.

Governments, companies and the general population are all grappling with new technologies and their implications. Enzo, in his introduction, acknowledged the complexity of such developments and suggested that “we are in a situation where technologies which are ignorant of law and ethics are being legislated by people who are ignorant of technologies.” This was not a question of politics, he explained, but of ethics. Developing machine learning solutions is ground upon which technology providers must tread carefully. The ethics of its applications walk a knife-edge, beneath which lie the potential rise of Skynet and an apocalyptic PR disaster.

March was a timely month for such a talk. On the 17th, the Observer newspaper broke a story that would introduce the world to just one of the many complications of machine learning. A whistle-blower alleged that Cambridge Analytica, the British data analytics company with ties to both Donald Trump and the pro-Brexit campaign, had used personal data derived from millions of Facebook users to influence political voting. The resulting fallout caused Facebook’s stock value to plummet by $57 billion in a week.

Just a day later, a self-driving car developed by Uber struck and killed a 49-year-old woman in Arizona. The SUV had a human backup driver behind the wheel, though earlier that month the state’s governor had authorised the company to run tests without a safety operator at all. Arizona would later suspend Uber’s self-driving tests indefinitely.

These two stories, breaking just a day apart, are linked by the technology that drives them. Advances in artificial intelligence and machine learning have facilitated the development of software with the potential to, for example, drive an autonomous SUV in Arizona – at least in terms of intention, a relatively benign concept. But these advances are rapidly posing difficult questions, like those surrounding Facebook and the data accessed by Cambridge Analytica.

As Enzo says, machine learning is “not just a technical exercise...it will affect our daily lives. There is something in the ethical dimension, other than just the technical dimension.” This ethical dimension is becoming more and more important for technology providers to understand as the demand for machine autonomy increases.

Facebook’s and Uber’s difficulties are a warning to other companies looking to explore the depths of machine learning. Though these events might seem like deterrents, enhancing AI systems with machine learning could change millions of lives for the better.

The key to successful machine learning, Enzo demonstrates, is context. “It’s all very well from a technical perspective if it [a self-driving car] works in a lab, but on the road you’ve got pedestrians, you’ve got cyclists, you’ve got people walking around and dogs suddenly jumping out,” Enzo said. “...so machines need to be context aware. From a machine’s perspective, a toddler moving along the road has got the same value as any other object moving across the road.”

This is a reference to another news story, albeit a slightly older one. In July 2016, a security robot at a California shopping centre failed to stop after it knocked down a sixteen-month-old boy. The 300-pound robot proceeded to run over the boy’s leg, and would have continued had the toddler not been pulled away by his horrified father. The incident was a worrying reminder of how dangerous poorly designed artificial intelligence might be. “The fact that it is a toddler, and therefore needs protecting, is context-specific information that’s not innate,” Enzo explained. “We need to somehow provide that information.”

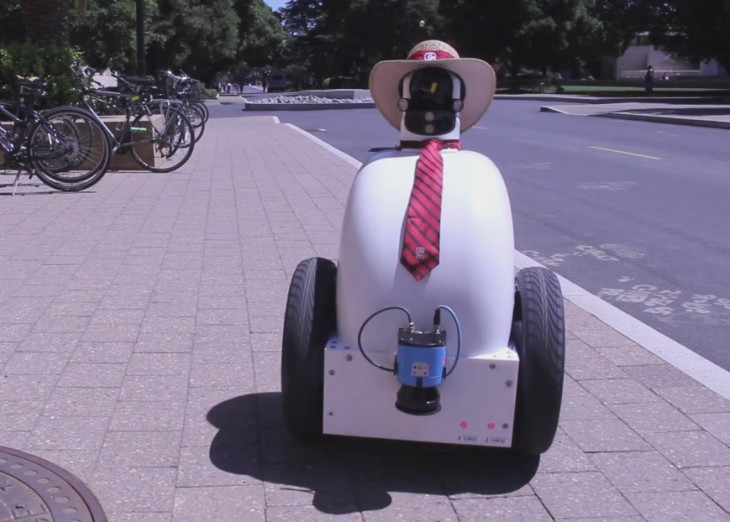

Providing this context is not easy. Enzo describes how engineers at Stanford University equipped the ‘polite’ robot Jackrabbot with enough context to navigate large pedestrian crowds. Though the task might seem mundane, Enzo explains that the sheer amount of processing the machine’s AI must do makes the challenge enormously complicated. “Humans use a lot of cues to navigate,” he said. “We look at each other’s faces, we look at each other’s eyes. You cannot do that as a robot. You don’t have the whites in the eyes.” Instead of relying on these small behavioural cues, the engineers at Stanford sent up drones to watch crowds pass by below, feeding the data collected into Jackrabbot’s AI. From this, the robot learned how to pass through the crowd. It taught itself.

“They didn’t actually give him any rules,” Enzo explained. “They had hours and hours of drone footage, of people walking around, and Jackrabbot inferred the rules...that’s the critical thing. You don’t have to codify these rules. The rules can come out from actually observing what people are doing, what humans really do.”

So, as Enzo states: “The way you get artificial intelligence to work with machine learning is by providing lots and lots of datasets to train the system.” But acquiring enough datasets for the AI to learn adequately can be difficult, and it is an area where some tech firms have run into problems. Stanford University were free to fly drones to capture footage of crowds, but it isn’t always so straightforward. Enzo describes the tribulations of British start-up Metail, who launched in 2008 with the idea of creating an accurate body avatar for online shoppers, so customers could see how clothes fitted without having them to hand.

The problem was that the Metail system required sensitive pictures of the customers to create these avatars; pictures that proved unsurprisingly difficult to collect. Metail have since stepped back, employing a different system whereby you enter measurements – but the lesson was learned. Collecting enough datasets from the public can be difficult and morally ambiguous, and can lead to Facebook-Cambridge Analytica levels of fallout.

Collecting data is not the only ethical conundrum presented by machine learning. The ownership of this data is also an area for concern. “Who is in control of the datasets?” Enzo asks. “That’s a fundamental problem. The current situation is that datasets are generated by academics, and by companies...there’s a societal problem in this, in that the percentage of people from minority backgrounds represented in academia is way lower than people from the majority backgrounds.” To demonstrate the problem this raises, Enzo cites a study that shows how some facial recognition systems are accurate only for white, male faces. It’s clear that datasets could become a hugely powerful – and financially important – resource in the future: “There’s a lot of work making chips for artificial intelligence,” Enzo explains, “but maybe there’s actually more money in creating datasets and selling them.”
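To make the facial recognition point concrete, here is a minimal Python sketch of how a single headline accuracy figure can hide poor performance on an under-represented group. It is not the method of the study Enzo cites: the function name `accuracy_by_group`, the group labels and the toy records are all hypothetical and invented purely for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return accuracy per group for (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy results, NOT real measurements.
# group_a is well represented (10 samples); group_b is not (2 samples).
records = (
    [("group_a", "match", "match")] * 9
    + [("group_a", "no_match", "match")] * 1
    + [("group_b", "match", "match")] * 1
    + [("group_b", "no_match", "match")] * 1
)

# Accuracy over all records, ignoring group membership.
overall = sum(predicted == actual for _, predicted, actual in records) / len(records)

# Accuracy broken down by group.
per_group = accuracy_by_group(records)

print(f"overall: {overall:.2f}")
for group, accuracy in sorted(per_group.items()):
    print(f"{group}: {accuracy:.2f}")
```

In this toy case the overall figure is dominated by the larger group, so a system can look acceptable in aggregate while being no better than a coin toss for the smaller one. Reporting accuracy per group, rather than a single number, is one simple way to surface that kind of gap.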

The challenges facing tech firms interested in machine learning are clear, and the possibility of a huge PR fallout is obvious. The ethical complications of such research, especially with regard to collecting datasets, are myriad and varied, and how companies attempt to navigate such waters will define the future of the industry. As Enzo says, bringing technology and philosophy together might provide an answer in the future; a future that Sondrel is working towards every day.

For over 15 years Sondrel has been known for delivering quality, highly complex IC designs that have appeared in hundreds of leading-edge products, including those of the market leaders in mobile phones, security, network switches and routers, cameras, and many more. More recently, as applications have developed, we have worked on design engagements implementing machine learning technologies, products for virtual and augmented reality applications and supporting AI functionality.